This image is making its way around social media right now.

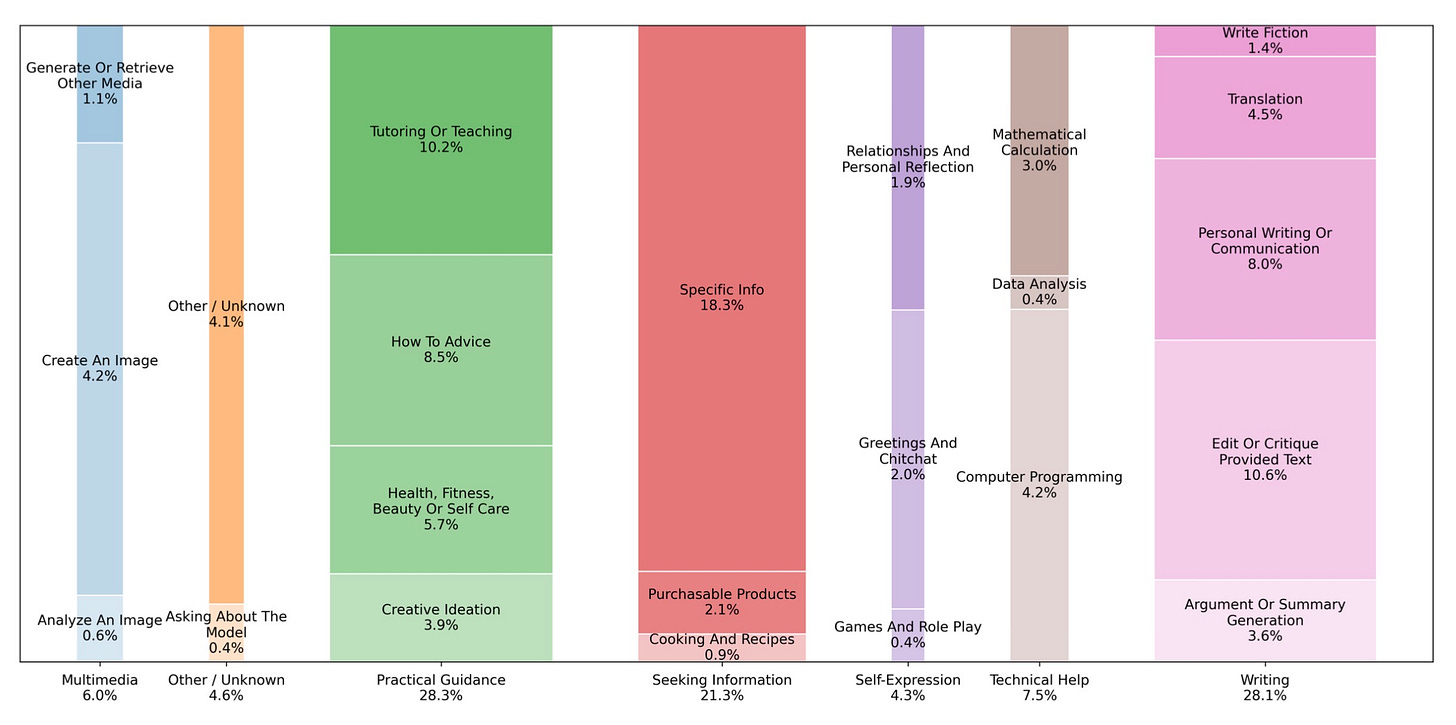

It’s pulled from a new usage study done in partnership between OpenAI and NBER. It breaks down how people are using ChatGPT, based on billions of interactions. On the surface, it feels definitive, and it’s being presented that way, as if this is how everyone is using AI in 2025.

And to be fair, that’s a logical conclusion. When data is backed by the weight of billions of inputs and formatted into a friendly chart, people tend to stop questioning anything related to it. The problem? Most of what I’m seeing in the headlines and social posts about this chart is noise.

Per usual, I shared my off-the-cuff thoughts on YouTube: the chart is interesting, and it tells us part of the story. However, the way many people are interpreting it misses the point. That’s why I’m coming back to it here. This week, I want to take a little more time to refine my thoughts and properly frame my insights, because this report does tell us a lot. What’s most important just isn’t what’s immediately obvious. It’s hiding between the bars of that chart.

With that, let’s get into it.

Before we do, editor Christopher dropping in really quickly. As an update, I recently activated paid subscriptions. No pressure, but if you do find value in the work I do, would you consider financially supporting it? And, if a monthly ‘thank you’ is more than you’re comfortable with, you can also do a one-time thank you here. Appreciate the consideration, and with that, let us continue.

Key Reflections

“Noise isn’t harmless; it eats your attention. And, when leaders lose focus, they miss what actually matters.”

Reading the takes on this chart reminded me of more executive meetings than I can count. The ones where the energy is sky high, people are passionately debating, slides and charts filled with data are flying across the screen, but no one can actually explain the ‘so what.’ It’s the same here. Billions of prompts give the chart weight, and if you’re not careful, you can believe every slice of data matters. However, a lot of the conclusions are overstated or trivial. Most people don’t need to know writing prompts outpace technical ones by a few percentage points. It feels important because of the scale, but in reality, it’s just noise.

And it’s not just that it’s noise. Noise comes with consequences, because it’s more than annoying; it’s exhausting. The more we let ourselves get swept into the churn of hot takes and micro-details, the less capacity we have to focus on the things that matter. Our attention is finite. When we burn it up chasing noise, we don’t have the energy left to sit with the harder, more valuable insights reports like this offer. The real danger is not just that we waste time on nonsense, but that the fatigue from the endless chatter dulls our ability to pay attention to what matters.

At the end of the day, leaders don’t lose clarity all at once. They lose it by inches, in meetings and headlines that feel important but mean nothing.

“AI data may slice people into neat categories, but that’s not how humans live or work. Nobody is using AI in isolation.”

In the last reflection, I questioned whether anyone really needs to know that writing prompts edge out technical ones. I challenged it because it looks like a clean insight, and that’s exactly the problem. Charts like this amplify our assumptions and misunderstandings about work and people. We already tend to oversimplify how work gets done. We love breaking things into departments, job descriptions, or SOPs. Data like this reinforces those illusions. It makes us believe that people use AI in neat boxes. In reality, those boxes don’t exist. Talking about AI usage as if they do distorts reality by making our blind spots look legitimate.

Nobody performs work in clean little boxes. A single workflow is always a messy blend of activities scattered across the board. That’s the frustrating but wonderful human experience of work. Things are complex, nonlinear, and constantly shifting. When a chart reduces activity into neat categories like “writing,” “coding,” or “information-seeking,” it misses the essence of what makes work human. This is why examining AI adoption isn’t just technical. When we treat data about AI as a sterile task breakdown, we completely miss how AI is bleeding into the lived experience of how people navigate their day.

Think about your own use of AI for a moment. Do you really only use it for one thing? Of course not. That’s why we need to consider more broadly how AI is actually reshaping us.

“AI doesn’t just give bad answers; it gives confident ones. The danger is when we stop questioning them.”

Building on the last point, when you look at all the charts, they don’t just describe tasks. They tell a pretty concerning human story. The first chart, the one making the rounds on social media, shows where the majority of prompts fall. We see that people are largely asking for information and executing tasks. However, now combine this with the second chart, which digs into ‘how’ people engage (asking, doing, or expressing). When you do, it shines a light on something concerning. Almost nobody is “expressing.” Very little of the back-and-forth reasoning, challenging, or refining is happening. When you line the two charts up, it paints a picture of people asking AI for information and handing off execution, completely skipping the messy middle.

That gap is more than academic. It’s the erosion of critical thinking at scale. When people stop pausing to test or contextualize what AI gives them before execution, they aren’t just outsourcing tasks; they’re outsourcing judgment. Overconfidence is creeping in because AI’s responses are confident even when they’re wrong. Collaboration is shrinking because people lean on AI to “do it all themselves.” And now, the discipline of reflection and asking hard questions is atrophying. We know AI gives bad outputs, but people won’t recognize them because they’ve already stopped looking.

That’s the real story buried in the data. AI isn’t just accelerating work. It’s rapidly shaping how we think, and in some cases, whether we think at all.

“Adoption isn’t the win. Scaling bad habits faster only makes failure look like progress.”

One thing this study makes crystal clear is that adoption is well underway. Billions of prompts in a single week tell us AI has moved from early adopters to mainstream in record time. In some ways, that’s remarkable. Generative AI really only hit the global stage in November 2022, and in less than three years it has embedded itself into everyday workflows at a pace few, if any, technologies in history can match. That kind of acceleration is worth noting. However, there’s a catch. The vast majority of the focus I see is on celebrating adoption. Leaders are patting themselves on the back because their people are “using AI.” Far fewer recognize that this is exactly where the risk amplifies.

We have to remember that adoption is not synonymous with meaningful progress. We live in a culture obsessed with “more” and “faster,” but that’s not inherently good. In fact, it can be catastrophic. When people start accelerating bad habits like outsourcing judgment, skipping collaboration, and chasing speed over quality, adoption at scale exponentially amplifies risks. You will see faster mistakes, bigger blind spots, and louder noise. That’s not effectiveness; that’s just motion. If we don’t define and measure what effective AI use looks like, adoption is little more than a vanity metric. And in the long run, vanity metrics may disguise your failures as success.

That’s why this reflection matters most. Adoption is clearly inevitable, but effectiveness is not. If you don’t stop to define what good looks like, you may win the race to scale only to find you’ve been running in the wrong direction the entire time.

Concluding Thoughts

As always, thanks for sticking around to the end.

If what I shared today helped you see things more clearly, would you consider buying me a coffee or lunch to help keep it coming?

Also, if you or your organization would benefit from my help building the best path forward with AI, visit my website to learn more or pass it along to someone who would benefit.

As you sit with this reflection, I’d encourage you to think about how AI is integrating into your life. Are you paying attention to where it’s happening? Do you understand how it connects to the entire schema? Do you have any flags in place to evaluate if it’s making you and your situation better? If you answered no to any of those questions, I’d encourage you to take some time to get them answered. If you don’t, you’re going to drift. And I promise, where that drift takes you won’t be ‘better.’

With that, I’ll see you on the other side.